A while back, Michael Quinn Patton shared a YouTube video on a minimum specification (essential elements) for Utilization-Focused Evaluation.

Inspired by Michael’s work, I created this 8-minute video on a “min spec” for better value for money assessment. It’s been sitting on YouTube, and I’ve felt a bit sheepish about sharing it. But now, in a strong vote of confidence, Jane Davidson and Thomaz Chianca have included it in their superb new online training course, Impact Evaluation Without Tears! So here it is for you, too:

If you prefer reading to watching a video, the rest of this post is for you. It explains the same principles in different words (so there’s value in checking out both the video and the written summary).

Big picture

If you’re familiar with my posts, you’ll know I prefer the term Value for Investment (VfI), and I’ll refer to VfI (instead of value for money) throughout the following discussion - because it’s never just about the money.

Policies and programs aren’t just costs - they’re investments in value propositions. They create value from all the resources invested in them - not just money but people, relationships, inspiration, perspiration, etc. They create many kinds of value - e.g., social, cultural, environmental, and economic. They create value not just by maximising efficiency but by balancing multiple considerations like equity, sustainability, ethical practice, human dignity and rights, and more. Money is a valid way of measuring value, but it’s just one way. Value isn’t money.

VfI assessment is evaluation. Examples of evaluative questions about VfI include:

How well are we using resources?

Does the resource use create enough value?

How can we create more value from these resources?

All the great stuff we already do as evaluators applies to VfI evaluations. What we need is a system to help us apply an appropriate mix of methods and tools for our context, guided by a set of principles and a process.

Four principles…

I emphasise the following four principles for better evaluation of resource use:

Inter-disciplinary (evaluation and economics)

Mixed methods (qualitative and quantitative)

Evaluative reasoning (evidence and explicit values)

Participatory (co-design and sense-making).

Let me explain why.

Inter-disciplinary (evaluation and economics)

VfI (good resource use) is a shared domain of evaluation (the systematic determination of merit, worth and significance) and economics (the study of how people choose to use resources).

Determining “the merit, worth or significance of resource use” involves answering an evaluative question about an economic problem, drawing on theory and practice from both disciplines.

Evaluation and economics share an interest in resource use and value creation. The two disciplines bring complementary models, frameworks, techniques, tools, and insights, while neither discipline alone provides a comprehensive answer to a VfI question. We can strengthen VfI evaluations by using economic and evaluative ways of thinking throughout the process, including program theory, rubrics, evidence gathering, methods of analysis, judgement-making, and reporting.

You don’t have to use economic methods of evaluation (like cost-benefit analysis or cost-effectiveness analysis) in a VfI evaluation, though it’s often a valid and useful perspective to include. These methods principally give us indicators of efficiency, and they’re not enough on their own. For example, good resource use also involves balancing equity and other criteria.

Bottom line: the VfI system combines evaluative and economic ways of thinking about resource use and value creation.

Mixed methods (qualitative and quantitative)

Good VfI evaluation recognises the value of both quantitative and qualitative forms of evidence, the importance of matching methods to context, and the value of combining them. Often this helps an evaluation to reach and communicate a deeper understanding than we could get from using any one method alone.

Mixed methods, as Jennifer Greene has written, can enhance evaluation in multiple ways. For example, triangulating evidence from different sources can reveal areas where insights converge or diverge. Drawing on complementary strengths of different sources helps to gain a broader and deeper understanding of the processes through which a program creates value. The results from one method can be used to inform the design of another. Incorporating diverse perspectives can help to identify areas needing further analysis or reframing.

Consequently, mixed methods can strengthen the reliability of data and the validity of findings and recommendations. Mixed methods help us to understand the story behind the numbers.

Evaluative reasoning (evidence and explicit values)

Evaluation involves combining empirical evidence with explicit values to make judgements about the merit, worth, significance, or value of something – in this case, resource use.

This process is underpinned by four distinct steps:

identifying criteria (aspects of value),

developing standards (levels of value),

gathering and analysing evidence, and

synthesis – interrogating the evidence through the lens of the criteria and standards to make evaluative judgements.

These four components (criteria, standards, evidence, and synthesis) are built into good VfI evaluation. In practice, this often involves developing one or more rubrics.
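To make the four components concrete, here is a minimal sketch in Python of how a rubric might be recorded. It is an illustration only, not part of the VfI system: the level names in `STANDARDS`, the example criteria, the evidence notes, and the `Judgement` and `weakest` names are all hypothetical. The code only organises judgements that people have already made. The synthesis itself remains a deliberative step, ideally done with stakeholders.

```python
from dataclasses import dataclass

# Hypothetical standards (levels of value), ordered from lowest to highest.
STANDARDS = ("poor", "adequate", "good", "excellent")


@dataclass
class Judgement:
    criterion: str  # aspect of value, e.g. "equity"
    evidence: str   # short note on the evidence considered
    level: str      # standard judged to be met; must be one of STANDARDS

    def __post_init__(self):
        # Reject levels that are not defined in the rubric.
        if self.level not in STANDARDS:
            raise ValueError(f"unknown standard: {self.level!r}")


def weakest(judgements):
    """Return the judgements rated at the lowest level present."""
    if not judgements:
        return []
    lowest = min(STANDARDS.index(j.level) for j in judgements)
    return [j for j in judgements if STANDARDS.index(j.level) == lowest]


# Made-up example ratings, for illustration only.
judgements = [
    Judgement("efficiency", "cost per participant against plan", "good"),
    Judgement("equity", "reach into priority communities", "adequate"),
    Judgement("sustainability", "partner funding commitments", "good"),
]

for j in judgements:
    print(f"{j.criterion}: {j.level} ({j.evidence})")

print("Needs most attention:", [j.criterion for j in weakest(judgements)])
```

Laying the ratings out like this keeps each judgement tied to its criterion, its standard, and the evidence behind it, so the reasoning can be checked and challenged.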

Participatory (co-design and sense-making)

Thomas A. Schwandt (2018) described evaluative thinking as “a collaborative, social practice”. This phrase aptly describes the recommended way to approach a VfI evaluation. At every step of the process there are opportunities (or obligations) to include stakeholders, end-users of the evaluation, communities, and others with a right to a voice.

Program Evaluation Standards offer guidance on the features of good evaluations, including engaging stakeholders to ensure evaluations are contextually viable and meaningful for the people whose lives are affected by the program and the evaluation.

An inclusive, participatory approach supports stakeholders’ understanding of evaluation, their ownership of the evaluation processes and products, and their input into how it’s designed and conducted. It also strengthens the credibility and validity of the evaluation findings, and makes it more likely that the evaluation will be useful and actually used.

Ultimately, it is not the values of the evaluation team that matter in evaluation – it’s the values of affected people and groups – so including their perspectives when developing and using evaluation frameworks is paramount.

…and a process

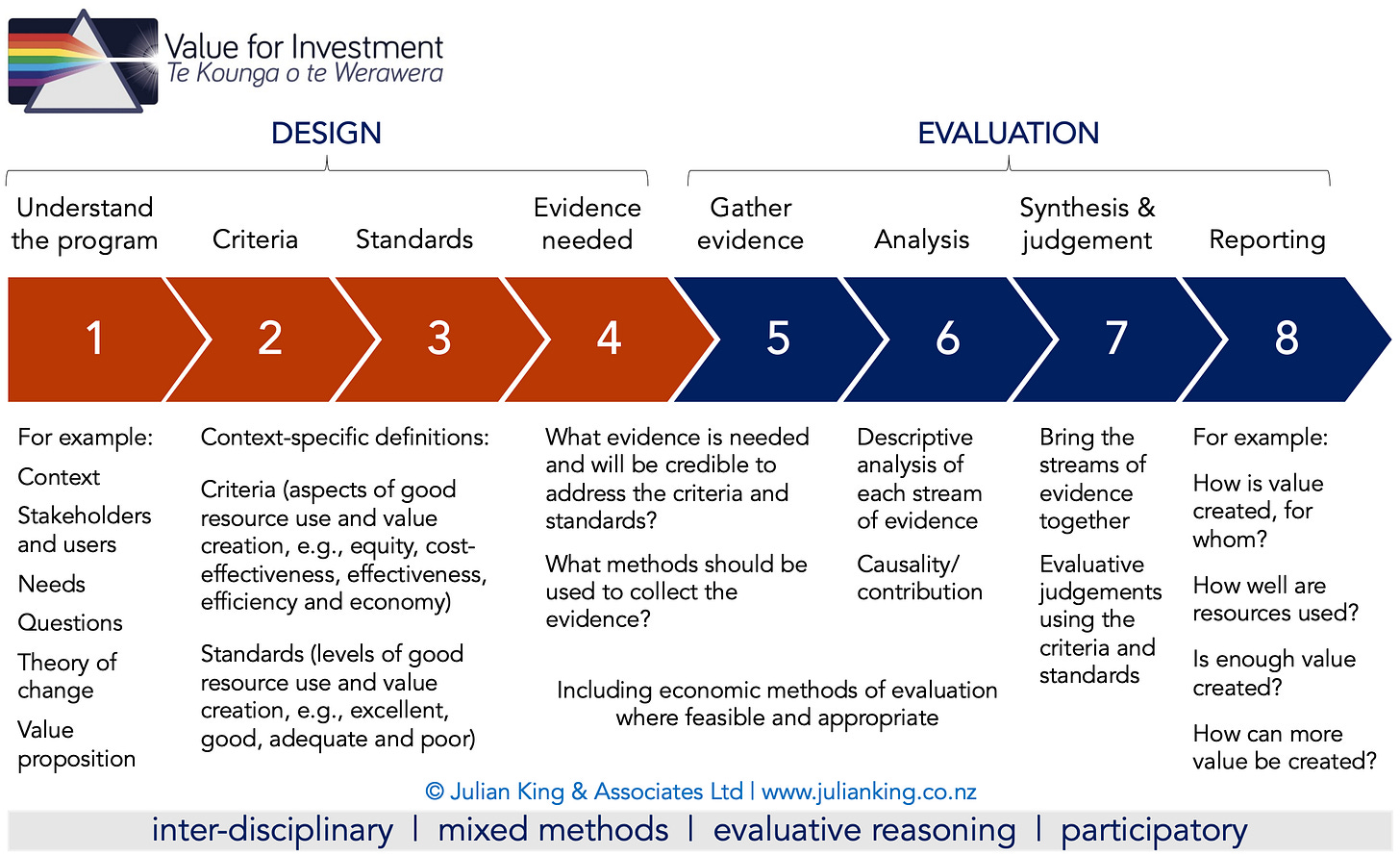

The following stepped approach makes VfI evaluation intuitive to learn, practical to use, and accessible for people who are new to evaluation. You’ll see the four components of evaluative reasoning (criteria, standards, evidence, and synthesis) built into these steps. Follow this link for a worked example, applying the 8 steps in a social and economic impact assessment.

The Value for Investment system

These four guiding principles (inter-disciplinary, mixed methods, evaluative reasoning, participatory) and the 8-step process are core parts of the Value for Investment system.

The VfI system isn’t another method. Evaluators already have the methods and tools to answer evaluative questions about resource use and value creation. This is a system to guide us in using an appropriate mix of methods and tools for our contexts, and to work reflectively and reflexively to provide clear answers to VfI questions.

The VfI system is designed to disrupt value for money assessment, making it more valid, more credible, more value-focused and, ultimately, more useful.

This approach was developed and tested through doctoral research, is open access, and is in widespread use. Could your evaluation be next to use the VfI system?

VfI resources and training

Free resources on the VfI approach are available on my website.

Training workshops are offered through evaluation associations globally (ask your association to book me in).

VfI is included in the online Master of Evaluation program at the University of Melbourne.

Private training is available (online or in-person) and can be customised to fit your needs, with workshops ranging from 3 hours to 2 days.

Contact me to inquire about training and mentoring opportunities.

Coming up: Half-day workshop at AES23. Details here.