Different kinds of rubrics

Generic, analytic, holistic, orbic, asterisc, escalieric, cephalopodic…

Rubrics are versatile things. They’re used in fields as diverse as teaching, geology, medicine, meteorology, evidence appraisal, and causal inference. They can be used to help evaluators judge the value of things. They can also be used to elicit evaluative data from stakeholders, like this example from Kate McKegg.

This post focuses on evaluation rubrics, developed with stakeholders to provide an agreed (inter-subjective) basis for judging merit, worth or significance of a policy, program or intervention.

An evaluation rubric brings together a set of criteria (aspects of performance) and standards (levels of performance), as a scaffold to support the process of making evaluative judgements from evidence. See my earlier post for some basic principles.

There are countless ways to structure a rubric. Let’s take a look at some examples, and some considerations for choosing between them. What follows isn’t an official taxonomy, just three broad options that seem flexible enough to fit a range of different situations. I’ll label them generic, analytic, and holistic.

Generic rubric

A generic rubric is a rating scale that’s defined in a sufficiently broad way that you can use it to evaluate just about anything. The picture below gives some examples. These examples each have four levels of performance or value, but a rubric can have any number of levels. Also, we don’t have to use terms like excellent, good, adequate and poor. For instance, we can define levels of growth instead, like the seed-to-flower metaphor pictured below. What matters is that the levels have clear definitions.

Essentially, a generic rubric is a defined set of standards. We need to use these standards in combination with a defined set of criteria. We apply the generic standards systematically, to give each criterion a rating. Then, if desired, we can synthesise the individual ratings to make a judgement across the criteria collectively. Here’s an example, based on a hypothetical program from one of my Evaluation and Value for Money workshops.
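For readers who think in code, the mechanics of applying a generic rubric can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the criteria names and ratings are hypothetical, the wording for "excellent" and "poor" is placeholder text, and the synthesis rule (take the lower median rating) is a stand-in for what is really a deliberative judgement, not a formula.

```python
from statistics import median_low

# Generic standards, ordered from lowest to highest.
# "adequate" and "good" use generic wording; "poor" and "excellent" are placeholders.
STANDARDS = {
    "poor": "not fulfilling minimum requirements (placeholder wording)",
    "adequate": "fulfilling minimum requirements",
    "good": "generally meeting reasonable expectations",
    "excellent": "exceeding reasonable expectations (placeholder wording)",
}
LEVELS = list(STANDARDS)  # dicts preserve insertion order


def synthesise(ratings: dict[str, str]) -> str:
    """Combine per-criterion ratings into one overall rating.

    Assumption: the lower median level stands in for a deliberative judgement.
    """
    for criterion, level in ratings.items():
        if level not in STANDARDS:
            raise ValueError(f"{criterion}: unknown level {level!r}")
    ranks = [LEVELS.index(level) for level in ratings.values()]
    return LEVELS[median_low(ranks)]


# Hypothetical criteria and ratings for a hypothetical program.
ratings = {"reach": "good", "quality": "excellent", "sustainability": "adequate"}
overall = synthesise(ratings)
print(overall)
```

The point of the sketch is the separation of concerns: the standards are defined once, and the same definitions are applied to every criterion before any overall judgement is attempted.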

Analytic rubric

An analytic rubric is a matrix of criteria and standards. It contains program-specific detail about each level of performance for each criterion. Here’s an example, adapted from an article I wrote in the American Journal of Evaluation. This one has five criteria and four standards, for a total of 20 descriptions of what the different aspects would look like at different levels of performance.

Holistic rubric

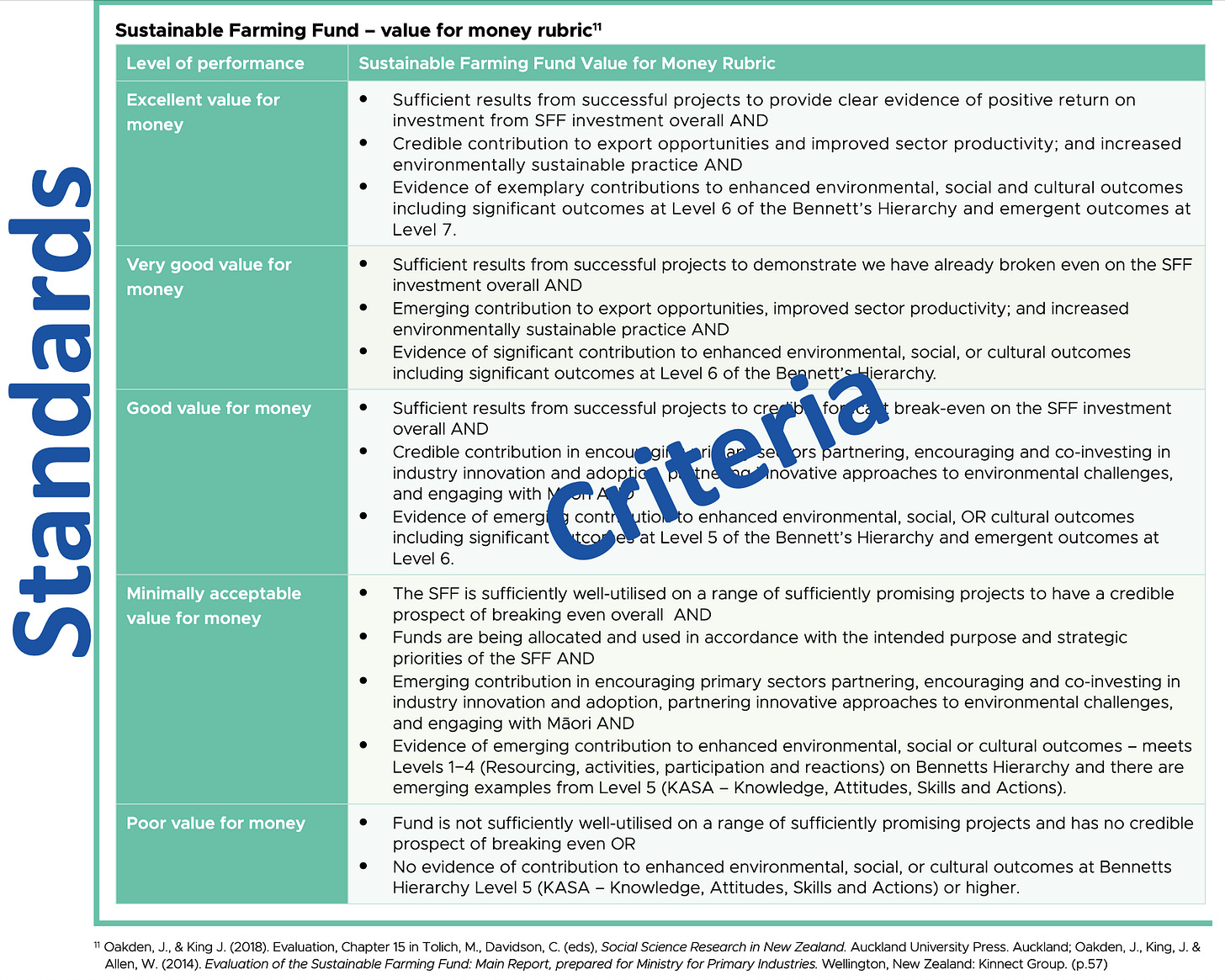

A holistic rubric also provides program-specific detail for multiple criteria at multiple levels of performance. But in contrast to an analytic rubric, the holistic rubric groups the criteria together, to describe sets of expectations or aspirations that would have to be demonstrated collectively to support a judgement at a particular level of performance. Here’s an example from the Kinnect Group’s Evaluation Building Blocks guide.

How to choose?

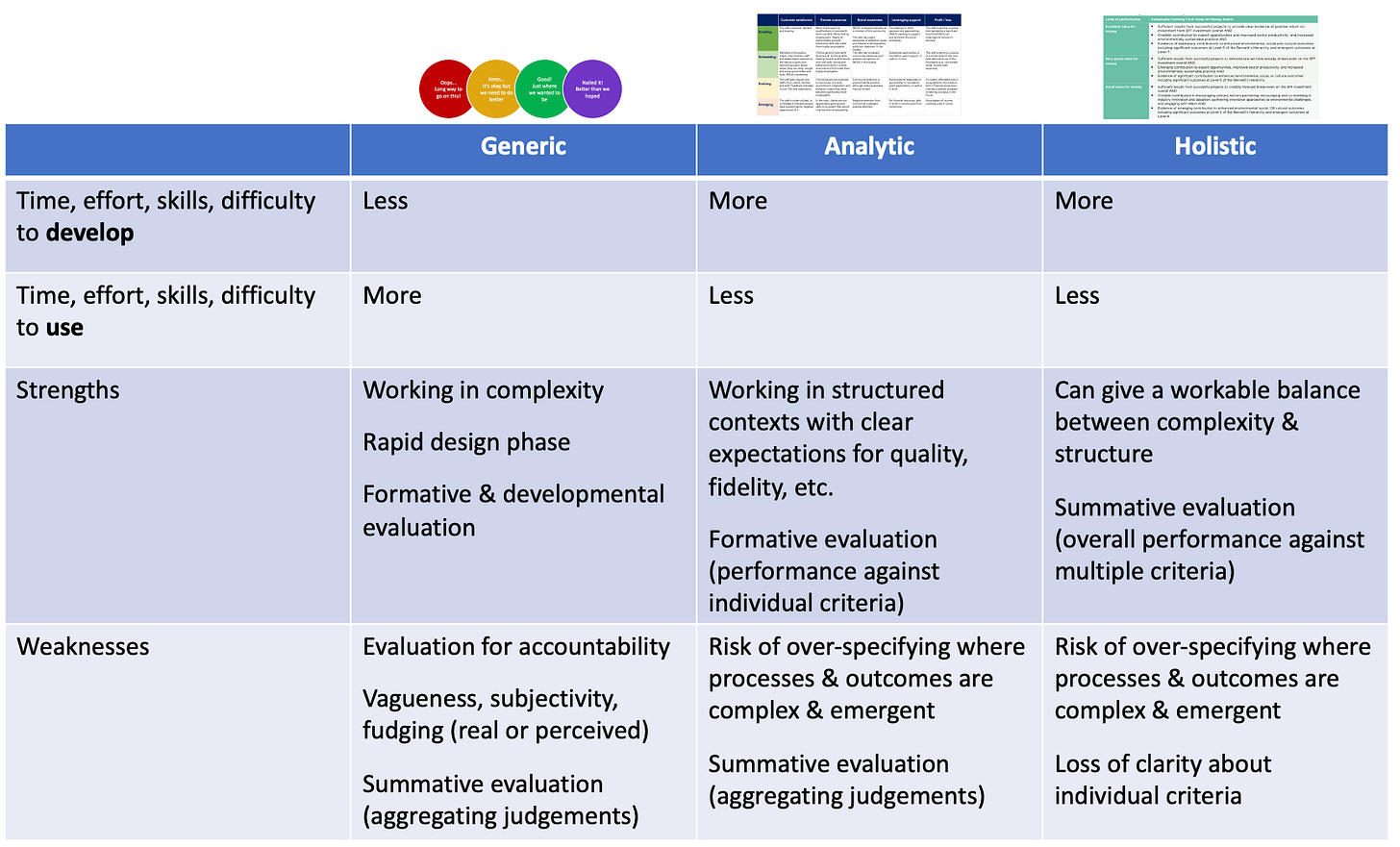

Any of these three kinds of rubrics can work in any setting, and there’s no clear ‘best’. However, they do have different pros and cons that might make them better-suited to some situations than others.

For example, generic rubrics are easier to make but harder to use in the sense that they don’t provide the specific, detailed guidance that comes from putting the work into developing analytic or holistic rubrics. On the other hand, generic rubrics are more flexible for making appropriate judgements in complex, emergent environments where the details of specific processes or outcomes may not be knowable in advance.

There are also trade-offs between analytic and holistic rubrics, depending on whether your main purpose is formative or summative evaluation. Analytic rubrics are well-suited to formative evaluation, where we want to consider each criterion separately to identify strengths and areas for improvement. Holistic rubrics provide extra clarity for combining the evidence across all criteria to judge the overall value of the policy or program.

Here’s a quick summary of some key trade-offs.

My go-to format

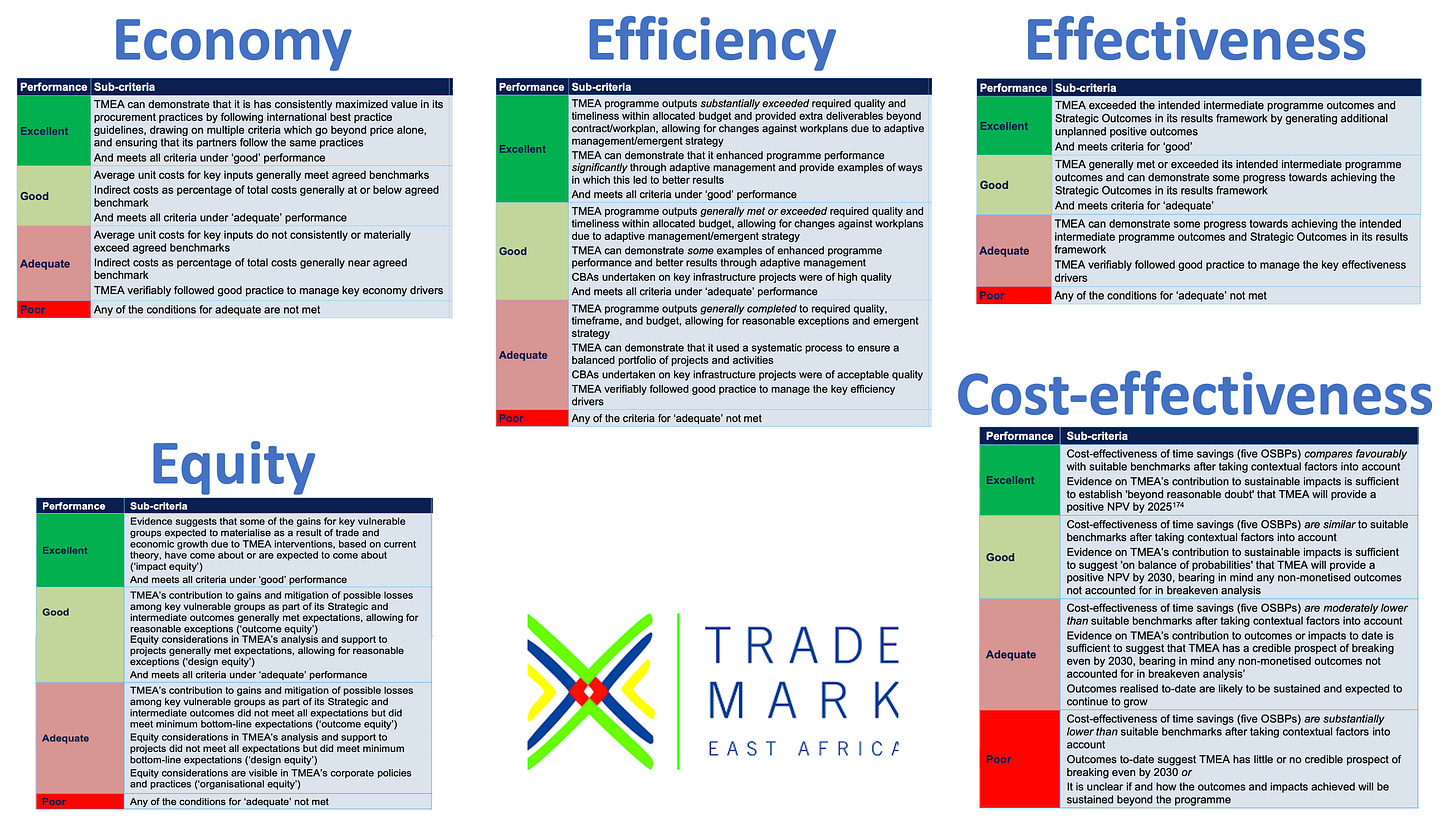

The three rubric types above aren’t discrete, mutually exclusive options. In value for money (VfM) assessment, my preferred option is often to combine elements of all three. Here’s an example of what that can look like.

From generic rubrics, we take the generic definitions of excellent, good, adequate and poor value shown in the table below. We use these definitions in two ways:

When developing program-specific rubrics, we align the levels of performance and value with the generic definitions - so, for example, in every evaluation, good always means “generally meeting reasonable expectations” and adequate means “fulfilling minimum requirements”. In this way, though every evaluation uses bespoke rubrics, there’s an underlying consistency to them.

When making evaluative judgements, if we find ourselves unsure, stuck between two levels, or debating how to balance multiple sub-criteria, we refer back to the generic definitions for big-picture guidance.

From analytic rubrics, we take the clarity that comes from separating out the criteria. So for example, if using the 5Es as VfM criteria, we would work sequentially, making separate judgements for economy, efficiency, effectiveness, cost-effectiveness, and equity, before combining them to judge VfM overall. This helps us to keep our analysis and reasoning well organised, and systematically identify strengths and areas for improvement.

And from holistic rubrics, we take the idea that each of the overarching criteria (e.g., each E) has a cluster of sub-criteria that have to collectively be met to attain a particular level of performance. In essence, each E has its own holistic rubric.

When we combine these elements of generic, analytic and holistic rubrics, what we get is a series of holistic rubrics, one for each criterion. It’s analytic in the sense that it breaks down the five criteria, generic in the sense that the levels of performance and value are defined in a consistent way, and holistic in the sense that within each rubric, multiple considerations make up the definitions of each level of performance for each criterion. Here’s an example.
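The same structure can be sketched as a small Python data structure: one holistic rubric per criterion, with each level listing sub-criteria that must be met together. This is a rough illustration only. The sub-criteria wording and the evidence are invented, only two of the five criteria are shown, and the sketch assumes levels are cumulative (reaching "good" presupposes meeting "adequate"). Real judgements involve deliberation over evidence, not a lookup.

```python
LEVELS = ["adequate", "good", "excellent"]  # anything below "adequate" is "poor"

# One holistic rubric per criterion; all sub-criteria are hypothetical examples.
RUBRICS = {
    "economy": {
        "adequate": ["costs within budget"],
        "good": ["inputs procured at competitive prices", "cost drivers monitored"],
        "excellent": ["savings reinvested in delivery"],
    },
    "equity": {
        "adequate": ["priority groups identified"],
        "good": ["priority groups reached"],
        "excellent": ["priority groups shape delivery"],
    },
}


def rate(criterion: str, met: set[str]) -> str:
    """Return the highest level whose sub-criteria, and those of every lower level, are all met."""
    rating = "poor"
    for level in LEVELS:
        if all(item in met for item in RUBRICS[criterion][level]):
            rating = level
        else:
            break
    return rating


# Hypothetical findings: which sub-criteria the evidence supports.
evidence = {
    "economy": {
        "costs within budget",
        "inputs procured at competitive prices",
        "cost drivers monitored",
    },
    "equity": {"priority groups identified"},
}
judgements = {criterion: rate(criterion, met) for criterion, met in evidence.items()}
print(judgements)
```

Each criterion gets its own judgement first (the analytic part), using the same level names throughout (the generic part), and each level is reached only when its cluster of sub-criteria is met collectively (the holistic part).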

This approach gives us a practical framework for systematically assessing VfM. Each rubric is sufficiently detailed and meaningful to guide appropriate judgements, and we can keep them to a manageable size. The VfM report can address each criterion sequentially, which makes it logical to navigate, as well as presenting a judgement on VfM overall.

This structure works well in VfM assessments, which are often driven by donors’ accountability imperatives and where we also want to support wider purposes, such as stakeholder engagement in the evaluation process for utilisation, empowerment, learning, adaptation and improvement. This structure provides a workable balance between these objectives. If you’re working in a different environment, your balance may be different.

Of course, as I’ve mentioned before, the 5Es are a choice, not a requirement. This format can be used with any set of criteria.

“Our kaupapa doesn’t fit in boxes”

These words still ring in my ears, more than ten years after a workshop with Māori colleagues and stakeholders, aimed at developing a rubric to support programmatic self-evaluation and decision-making. The notion of a rubric initially failed to resonate with stakeholders because our starting point was a rectangular table, the default format that’s easy to make in Microsoft Word or PowerPoint. Lesson learnt!

One of the strengths of rubrics is that they provide a visual representation of criteria and standards, which helps to make the foundations of the evaluation accessible and resonant with stakeholders. To this end, we shouldn’t limit ourselves to boring tables. Here are some examples of rubrics that refuse to be boxed in by default word processing tools.

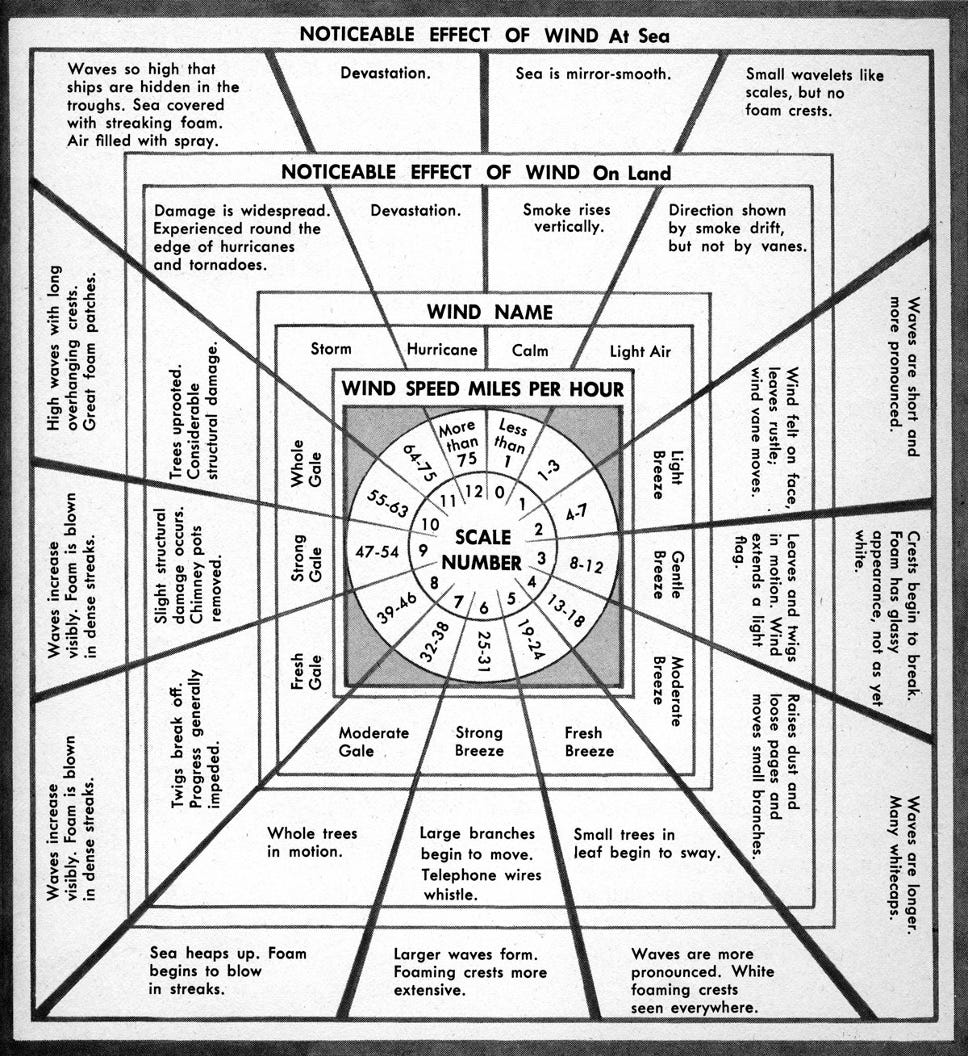

Orbic - like a spider’s web

The beautiful Beaufort Scale (again). Because I love it.

Asterisc - like a star

Sport and Recreation New Zealand’s Guiding Star, Te Whetu Rehua (again) - helping sport and recreation providers consider how they might design or adapt activities to be culturally responsive to Māori. Adding value for over ten years and still going strong.

Escalieric - like a staircase

Honestly, I don’t know if I’m Latin-ing right. I’m just riffing off things that end in -ic.

In Māori-led evaluations, the stepped pattern and symbolism of Poutama have been incorporated in rubrics. I don’t have a real example to share but here’s an illustration of the general concept…

Cephalopodic - like a nautilus shell

I don’t have an example of a rubric like this. But now that I’ve thought of it, I definitely want to make one! As the nautilus grows, it adds bigger chambers to the spiral. What a beautiful growth metaphor for a rubric.

How about you?

What’s the most unusual, memorable or beautiful rubric you’ve seen? What’s your go-to rubric structure?

I feel the "orbic" (spider webs) representation of rubrics offers very interesting potential for aggregation of data and for its representation and analysis when working in complex programmes with different layers/levels of engagement (such as consortium, country, location, or portfolio, programme, project) that last several years.

The general categories of the rubric, for example, could be described with distinct criteria for each of the levels (to make them better fit the work done at each level). In fact, categories could even be disaggregated into sub-categories, which are then used selectively for each of the levels, depending on how relevant they are. These sub-categories could evolve as a programme advances, with some being discarded and others added to match what is relevant for the programme at a given moment. Even if they evolve, they would contribute to assessing the general (more stable) categories.

If data is collected regularly at the different levels of operation, very interesting aggregation of the data could then be done, which a spider web diagram could represent in a very intuitive way, as coloured lines around the center. This would allow comparing the results for the different layers, different locations, the evolution through the years, etc., in a very intuitive way that normal human beings would be able to interpret, or use as a starting point for deliberations.

I haven't found a tool that makes such data collection, aggregation, and representation easy (certainly not Excel)... but the potential is clearly there!

If anybody has any suggestion... please share.
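As a rough illustration of the aggregation idea (not a recommendation of any particular tool), the sketch below uses only the Python standard library. Everything in it is an assumption made for the example: the layers, the categories, the 1 to 4 scoring scale, and the choice of the median as the aggregation rule. It computes one aggregated score per category for each layer, then the vertex coordinates of each layer's line on a spider web diagram, which any plotting tool could draw.

```python
import math
from collections import defaultdict
from statistics import median

# Hypothetical ratings: (layer, category, score on a 1-4 scale).
records = [
    ("country", "relevance", 3), ("country", "relevance", 4),
    ("country", "learning", 2), ("country", "reach", 3),
    ("project", "relevance", 2), ("project", "learning", 4),
    ("project", "learning", 2), ("project", "reach", 1),
]


def aggregate(rows):
    """Median score per category within each layer."""
    grouped = defaultdict(lambda: defaultdict(list))
    for layer, category, score in rows:
        grouped[layer][category].append(score)
    return {
        layer: {cat: median(scores) for cat, scores in cats.items()}
        for layer, cats in grouped.items()
    }


def radar_points(scores, categories):
    """(x, y) vertices of one spider-web line, one spoke per category."""
    step = 2 * math.pi / len(categories)
    return [
        (scores[cat] * math.cos(i * step), scores[cat] * math.sin(i * step))
        for i, cat in enumerate(categories)
    ]


categories = ["relevance", "learning", "reach"]
summary = aggregate(records)
lines = {layer: radar_points(scores, categories) for layer, scores in summary.items()}
print(summary)
```

Because sub-categories feed into stable general categories, the same `aggregate` step could be repeated per year or per location to compare lines on one diagram.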

Cephalopodic could be a thing. I initially thought of an octopus-based one (Te Wheke - Rose Pere).