AES23 highlights

What happens in Brisbane stays on Substack

Kia ora koutou! This post shares a few thoughts from the Australian Evaluation Society Conference in Brisbane last week. My biggest take-home is a tacit one: It recharged my battery. I love being a part of this community, making new connections and catching up with old friends, some of whom I only get to see once a year. There were many good presentations - and still, my favourite part of conferences is the interstitial spaces between presentations and the chance conversations they bring.

If I had to pick just one slide that resonated for me (so much that my brain is still humming), it’s this, from Katherine Trebeck (though interestingly, she also shared other images that I would have been happy not seeing).

A lot has been said about the conference already, so I asked GPT3.5 to synthesise attendees’ social media posts from LinkedIn and X. First, I asked it:

What aspects of #aes23BNE did people value most?

The GPT answered:

“Based on the social media posts from the conference, several aspects of the event were highly valued by participants:

Cultural Sensitivity and Indigenous Perspectives: The emphasis on cultural sensitivity, Indigenous perspectives, and First Nations lifeworlds in evaluation practices was highly valued. Attendees recognized the importance of respecting and incorporating Indigenous values and methodologies into evaluations. Distinguished Professor Emerita Maggie Walter's keynote on this topic received significant attention and was seen as a pivotal aspect of the conference.

Inclusivity and Ethical Engagement: People highly valued discussions around inclusivity and ethical engagement, particularly concerning the engagement of people with lived experiences in evaluations. The focus on trauma-informed approaches, as well as the practical advice and workshops on ensuring ethical practices, resonated with participants. It was seen as a critical element in promoting a more just and equitable evaluation process.

Complex Systems and Adaptability: The discussions on addressing complex societal challenges, such as the global poly-crisis and climate change, were highly valued. Participants recognized the need for evaluators to be adaptable and systems thinkers in order to effectively address these challenges. The integration of theoretical thinking with practical approaches was appreciated, as it provided concrete tools for evaluators to navigate complex issues.

Power and Transformation: Many valued the conference's focus on recognizing and reckoning with the power dynamics in evaluation. Donna Mertens' challenge to evaluators to be perpetually reflexive and her hope for evaluation as a tool for transformation resonated with attendees. The discussions around how evaluators can use their power to drive positive change were seen as thought-provoking and inspiring.

Networking and Connection: The conference provided a valuable opportunity for attendees to network, connect, and share experiences with colleagues in the field. These connections were seen as powerful resources for personal and professional growth and for collaborating on future evaluation projects.

Overall, the conference's value was derived from its ability to foster meaningful discussions, encourage cultural sensitivity, and offer practical tools for evaluators to navigate complex challenges while maintaining a strong ethical and inclusive stance. It provided a space for personal and professional reflection and growth, contributing to the ongoing development of the evaluation field.” (GPT3.5).

Next, I asked the GPT:

How did #aes23BNE make people feel?

This question is probably a stretch for an LLM tbh, but I was curious to see how it would answer. Here’s what it said.

“The conference evoked a range of emotions among participants, as reflected in the social media posts and comments:

Inspired: Many participants expressed feeling inspired by the conference. They were inspired by the thought-provoking keynote speakers, the emphasis on ethical and inclusive evaluation practices, and the discussions on addressing complex societal challenges. The conference seemed to have ignited a sense of purpose and motivation among attendees.

Challenged: Attendees noted that the conference challenged their assumptions and perspectives. Keynote speakers like Donna Mertens and Maggie Walter challenged evaluators to reflect on their practices, consider power dynamics, and rethink traditional evaluation approaches. This challenge was seen as a positive aspect, as it encouraged personal and professional growth.

Grateful: Several posts expressed gratitude for the opportunity to attend the conference and engage in discussions about evaluation, culture, ethics, and inclusivity. Participants were thankful for the connections made, the new insights gained, and the chance to learn from experts in the field.

Empowered: The conference seemed to empower attendees to make positive changes in their evaluation practices. The focus on Indigenous perspectives, ethical engagement, and transformative evaluation inspired a sense of agency and empowerment among evaluators to create more just and equitable evaluations.

Connected: Many posts highlighted the value of connecting with peers and colleagues in the evaluation field. The sense of community and the opportunity to share experiences and insights with like-minded professionals contributed to a feeling of connectedness.

Hopeful: Despite discussions about complex challenges and power dynamics, there was a sense of hope among participants. The focus on transformation, ethical engagement, and cultural sensitivity provided a sense of hope for positive change in the field of evaluation.

In summary, the conference appeared to have left participants feeling inspired, challenged, grateful, empowered, connected, and hopeful. It provided a platform for deep reflection and discussion on important topics in the field of evaluation, evoking a range of positive emotions.” (GPT3.5).

Does it resonate for you?

A note about large language models

It’s worth taking a moment to reflect on how autoregressive language models like GPT3.5 work. They aren’t sentient and they’re not ‘intelligent’ in the ways people are intelligent. They’re just good at one kind of math - so good they can use it to process language.

An autoregressive model is a statistical model that predicts future values in a time series from that series’ own past values (I had to pass an exam on them once, as part of an econometric forecasting course, which at least gives me a rough analogy for imagining what goes on behind the scenes in GPT3.5). Autoregressive language models work on a similar principle: given the previous words in a sequence, they predict a probability distribution over which word could come next. The model is trained by minimising the difference between that predicted probability distribution and the word that actually came next, across a ridiculously large number of training examples (like, the whole internet).
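To make the econometrics analogy a little more concrete, here’s a minimal Python sketch. It fits a toy AR(1) model, x_t = c + phi * x_{t-1}, by least squares, then forecasts forward by feeding each prediction back in as the next input. It also shows the per-example loss that next-word training typically minimises: cross-entropy, i.e. the negative log of the probability the model assigned to the word that actually came next. The function names, the series and the three-word vocabulary are all made up for illustration; this is the analogy, not how GPT3.5 is actually built.

```python
import math


def fit_ar1(series):
    """Least-squares fit of the AR(1) model x_t = c + phi * x_{t-1}."""
    xs = series[:-1]  # lagged values x_{t-1}
    ys = series[1:]   # current values x_t
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    phi = cov / var
    c = mean_y - phi * mean_x
    return c, phi


def forecast(c, phi, last, steps):
    """Forecast several steps ahead, feeding each prediction back in as input."""
    preds = []
    for _ in range(steps):
        last = c + phi * last
        preds.append(last)
    return preds


def cross_entropy(predicted, actual_word):
    """Loss for one training example: -log p(word that actually came next)."""
    return -math.log(predicted[actual_word])


# A toy series generated exactly by x_t = 2 + 0.5 * x_{t-1}
series = [10.0, 7.0, 5.5, 4.75, 4.375, 4.1875]
c, phi = fit_ar1(series)
print(round(c, 6), round(phi, 6))  # 2.0 0.5
print([round(p, 6) for p in forecast(c, phi, series[-1], 2)])  # [4.09375, 4.046875]

# A hypothetical predicted distribution over a tiny vocabulary
print(round(cross_entropy({"cat": 0.5, "dog": 0.25, "mat": 0.25}, "cat"), 4))  # 0.6931
```

The lower the probability the model gave to the word that really came next, the larger the loss, so training nudges the model towards putting more probability on what actually appears in the text.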

Once trained, the model can generate new sequences of text, one word at a time, by sampling from the predicted probability distribution at each step. This means the model chooses the next word according to the probability distribution over all possible words, then uses that word as input to predict the next word in the sequence, and so on, and so on. As the model generates more and more words, it keeps feeding the words it just generated back in as input. In this way, the model captures long-term dependencies in the sequence of words and generates coherent, plausible-sounding (if somewhat bland) bodies of text.
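Here’s an equally hedged sketch of that generation loop. The “model” is a hypothetical, hand-written table of next-word probabilities (invented for illustration, including the made-up `<start>` and `<end>` markers); we sample one word at a time and feed each chosen word back in to get the next distribution. A real LLM computes each distribution with a neural network conditioned on the whole preceding context, over tokens rather than whole words, whereas this table only looks at the last word.

```python
import random

# Hypothetical toy "model": for each previous word, a probability
# distribution over the next word.
NEXT_WORD_PROBS = {
    "<start>": {"evaluation": 0.6, "rigour": 0.4},
    "evaluation": {"is": 0.7, "matters": 0.3},
    "rigour": {"is": 1.0},
    "is": {"contextual": 0.5, "negotiated": 0.5},
    "matters": {"<end>": 1.0},
    "contextual": {"<end>": 1.0},
    "negotiated": {"<end>": 1.0},
}


def generate(probs, max_words=10, greedy=False, seed=None):
    """Generate text one word at a time, autoregressively."""
    rng = random.Random(seed)
    words = ["<start>"]
    while len(words) - 1 < max_words:
        # Look up the predicted distribution given the most recent word.
        dist = probs[words[-1]]
        if greedy:
            # Always take the highest-probability word
            # (ties go to the first word listed in the table).
            next_word = max(dist, key=dist.get)
        else:
            # Sample the next word in proportion to its probability.
            candidates = list(dist)
            weights = [dist[w] for w in candidates]
            next_word = rng.choices(candidates, weights=weights, k=1)[0]
        if next_word == "<end>":
            break
        # Feed the chosen word back in as input for the next step.
        words.append(next_word)
    return " ".join(words[1:])


print(generate(NEXT_WORD_PROBS, greedy=True))  # evaluation is contextual
print(generate(NEXT_WORD_PROBS, seed=1))
```

With `greedy=True` the loop always picks the single most probable next word, so it produces the same sentence every time; sampling (the default here) lets lower-probability words through some of the time, which is one way to see the trade-off between “safe” highest-probability text and more varied output.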

Bearing this in mind, I think it’s pretty amazing that GPT3.5 could process our collective social media posts to produce these summaries. I feel it picked up on valid points but expressed them in language that sometimes felt a bit platitudinous. I’d love to see LLMs experiment with edgier, riskier language instead of highest-probability cliches. Room for improvement, I’d say. What do you think?

A couple of things it didn’t pick up on

In addition to the positive sentiments the GPT summarised, I also clocked from some people a sense of overwhelm and despondency at the depth and complexity of the social, cultural, environmental and economic problems and our capacity to help address them in meaningful ways.

There weren’t many mentions online about the Hon Dr Andrew Leigh’s address and related panel conversation. Here’s a great reflection from the session chair. I couldn’t attend this session unfortunately, but I did listen to a recording of the Assistant Minister’s speech and I heard that the panelists and Slido participants made some great points. If anybody has access to a recording of the full session, please drop it in the comments!

I was pleased to hear the Assistant Minister cite Peter Attia (I’m also a fan of Peter’s work) and will confess to feeling patronised and grumpy that he would evalsplain the basic principles of randomised controlled trials to the professional evaluation community. Like dude, we know. We also know the contexts we work in.

We know that we can’t control our way out of complexity. We know that RCTs are not always desirable, feasible or necessary, and are too limited on their own to represent a complete evaluation of a complex social investment. We’re concerned about the risks of applying rigid evidence hierarchies in ways that could marginalise or displace more contextually appropriate paradigms. We hope we won’t see this happen. So let’s all work together to promote high quality, fit-for-purpose evaluations.

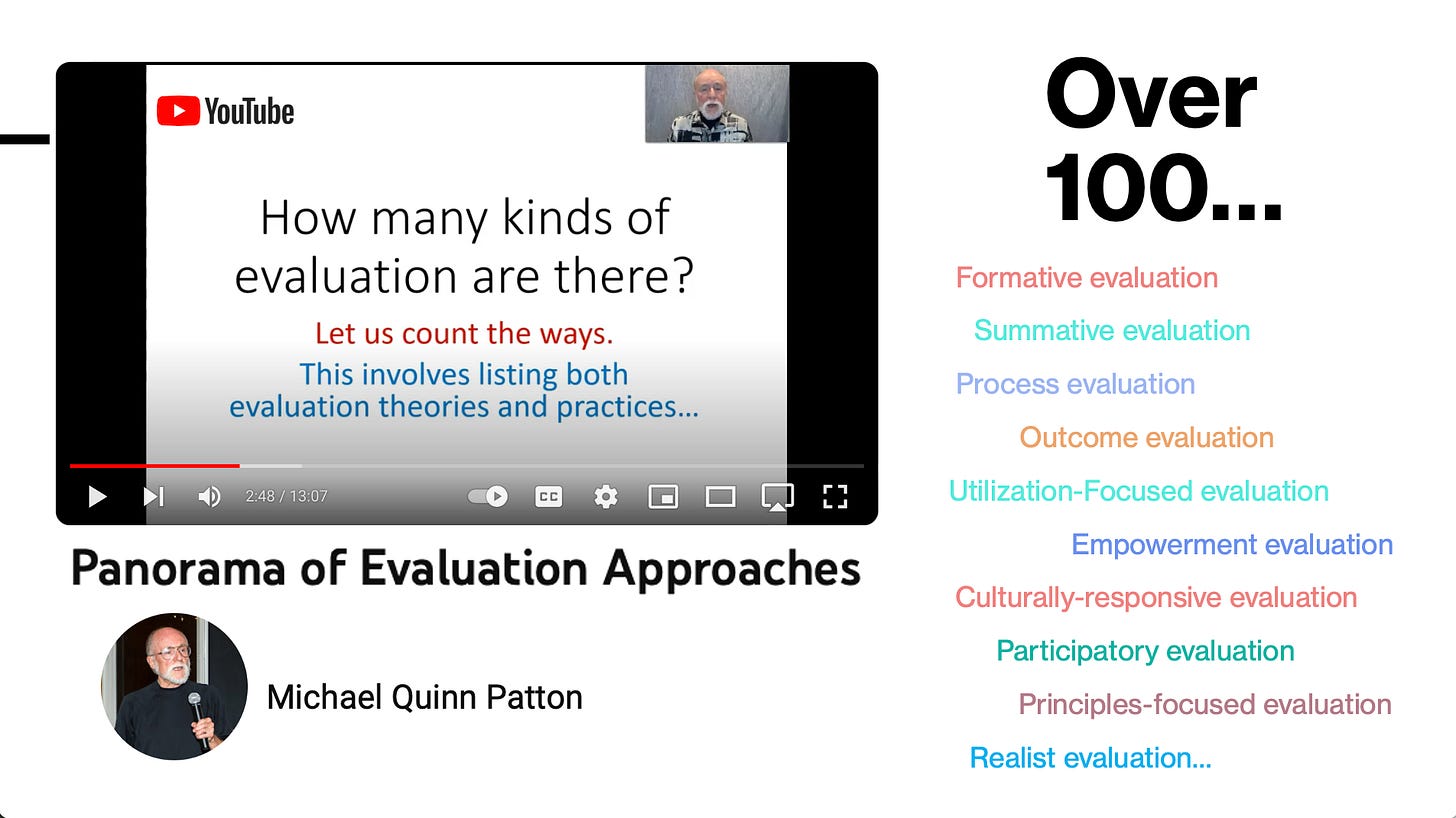

For my 2c worth, I think the opportunities are worth the risks. Having this level of attention paid to evaluation in the corridors of power is awesome even if it is also scary. I expect and hope that we will be able to build a constructive working relationship with the Australian Centre for Evaluation (ACE) so that we can help shape how government understands and engages with our field of practice. Similar to my perspective on cost-benefit analysis (CBA), in my view experimental and quasi-experimental research designs have the capacity to bring unique insights and answer important questions. They’re valid components of our discipline but we mustn’t place them on pedestals. Study designs need to be contextual and negotiated. Neither RCTs nor CBA are complete evaluations. We need to make evaluative judgements from a broader base of evidence, axiologies, epistemologies and lifeworlds. For that we need mixed methods, mixed designs, bricolage, and cubist evaluation. What’s that? Keep reading…

I was involved in four presentations. Here’s what we talked about…

1. Doing rigorous work in complexity

Kate McKegg organised a panel on Adapting and embracing new conceptions of rigour for evaluation practice in complexity. Panelists included Kate, Samantha Togni, and Keira Lowther. The idea for the panel came out of a blog post that Kate and Keira wrote, which you will find here. We wanted this to be a participatory session, and it was!

As Kate wrote in the abstract…

As commissioners, funders and evaluators find themselves working more often in complex situations; it’s coming to our attention that the dominant conceptions of rigour we’ve been trained in and that we are trying to use in research and evaluation are no longer a helpful fit for complexity.

Some of us feel guilty at not living up to the standards of rigour we’ve been trained in and feel frustrated at the disconnect and the insistence from some of those far from our work to comply with tenets of rigour that don’t fit comfortably with complexity and our socio-cultural realities. But what else is there?

This panel will reflect on what might count as rigour in complexity for evaluation in 2023 and beyond, focusing on what it takes to adapt and embrace new conceptions of rigour and evaluative practices that embody new ways of thinking and practising. The panellists will address the challenges to western conceptions of rigour that have been mounted by many over several decades and consider what is likely to resonate for commissioners, funders, evaluators and communities in the many complex settings and contexts we find ourselves evaluating, often at the interface of diverse knowledge systems. Questions such as who defines rigour, through what lens, and for what purpose in these complex settings will be considered as we grapple with ensuring evaluation remains relevant, useful and fit of purpose.

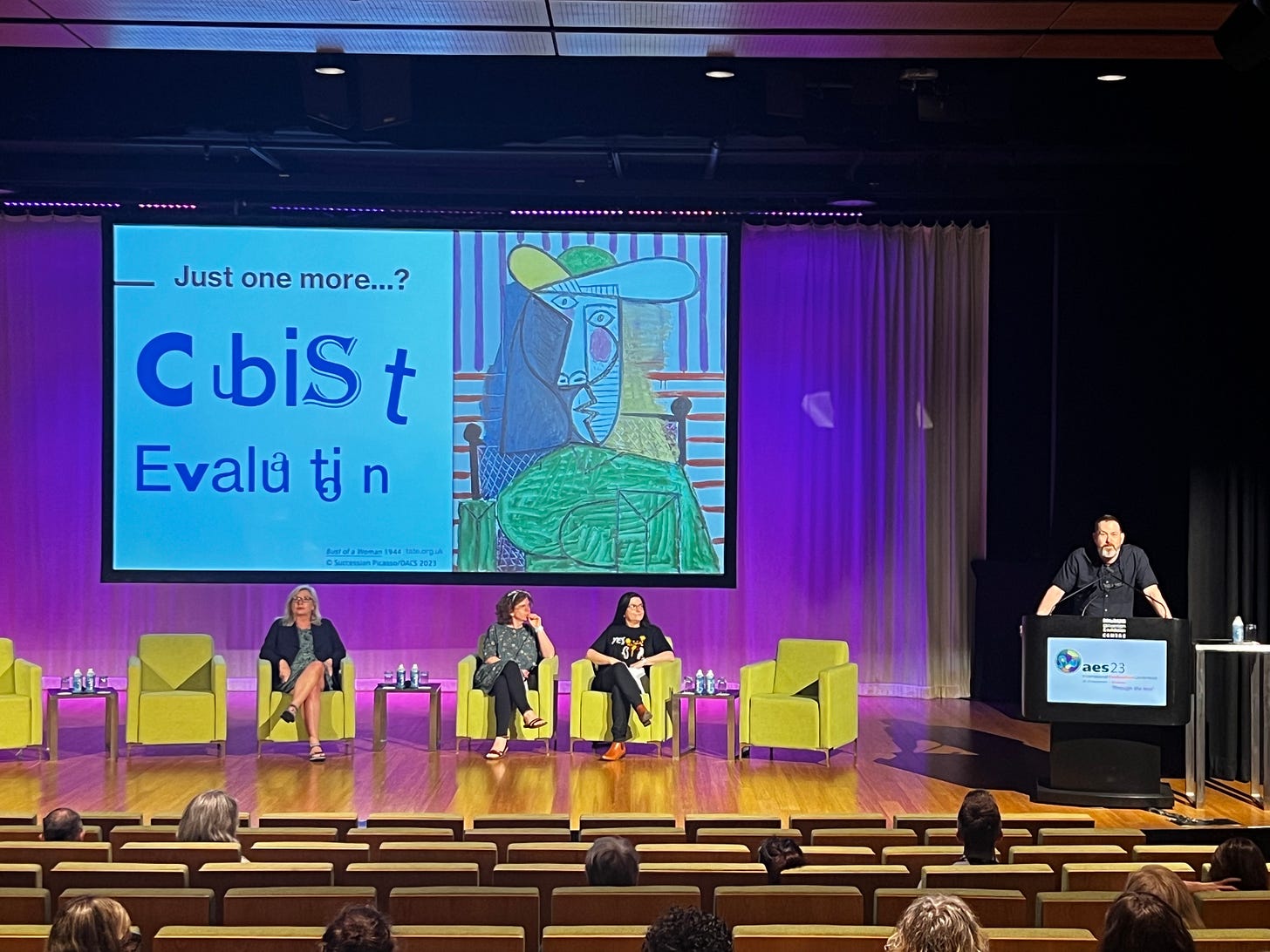

Cubist evaluation

I only have my own slides and I won’t even attempt to summarise my co-panelists’ incisive ideas in a nutshell. Here I’ll just share my contribution.

The early 20th Century Cubist art movement represented a paradigm shift that has had enduring influences on art, architecture and film - and maybe it’s time for evaluation to draw inspiration from Cubism too…

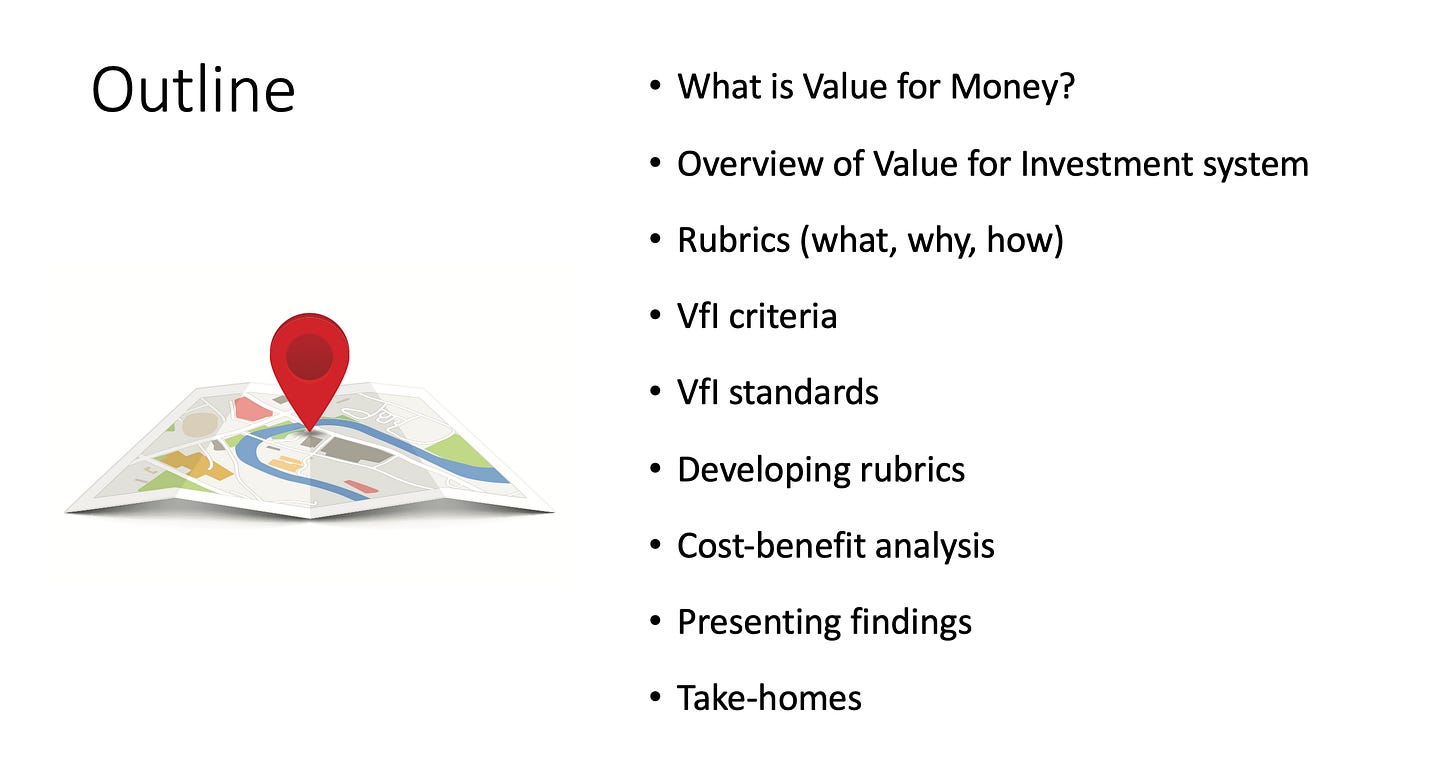

2. Half-day workshop on evaluation and value for money

I debuted my new half-day training workshop: Evaluation and Value for Money - An inter-disciplinary, participatory approach using evaluative reasoning and mixed methods. As always, it was a privilege and a treat to be able to spend time with colleagues. I love to share this material and I always learn a lot at the same time.

The workshop provided practical guidance, underpinned by a sound theoretical framework, for evaluating VfM. It unpacked a process of explicit evaluative reasoning (using rubrics), mixed methods and participatory evaluation, combining insights from evaluation and economics.

This new workshop is available for all evaluation societies and private in-house training workshops. Contact me to find out more.

3. Participatory evaluation design and sense-making: making meaning through co-constructed lenses

In this presentation, with colleagues Dr. Kerry Elliott and Dr. Hilary Hollingsworth from the Australian Council for Educational Research, we shared experiences from two recent projects where we took participatory approaches to exploring value for investment with stakeholders. Last week we shared a post based on the presentation, which you can read here. Key take-home:

4. Applying a Value for Investment lens in a National Youth Primary Mental Health Initiative

Dr. Adrian Field and I, together with Jane Maloney via pre-recorded video, shared the story of evaluating New Zealand’s national Youth Primary Mental Health and Addictions initiative.

The initiative invested $45 million in expanding access to, and choice of, support for young people aged 12 to 24 experiencing mild to moderate mental health or addiction distress.

The evaluation addressed three Key Evaluation Questions (KEQs):

Using the Value for Investment approach, we explored how value is being created through Youth PMHA and its performance against the following criteria:

Looking after resources equitably and economically - by expanding existing knowledge, expertise and flexibility of service delivery through contracting

Delivering services equitably and efficiently - by breaking down access barriers, offering more support options and tailoring interventions to reach different groups of young people

Generating social value equitably and effectively - by supporting young people and their whānau (families) and enabling effective system-wide resource use.

Dovetail Consulting has prepared a blog post on this evaluation, which you can read on their website. We also prepared a guidance document for evaluators which was the subject of a recent Substack post. There’s more I’d like to share on this, which I’ll save for next week.
